Error 0x800F081F when adding features on Windows Server 2016 on Azure Virtual Machine

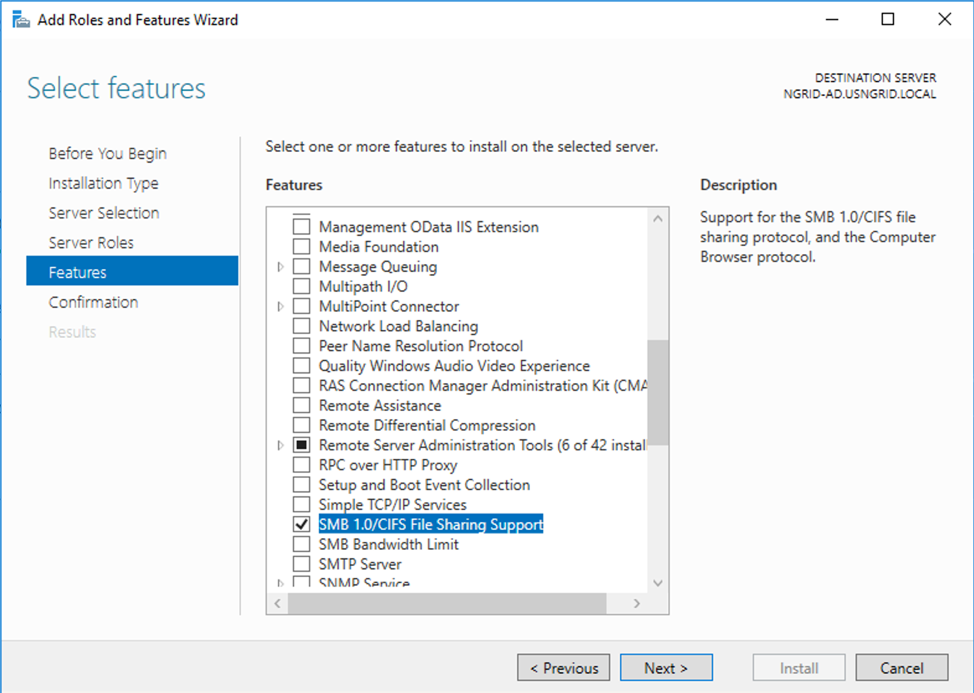

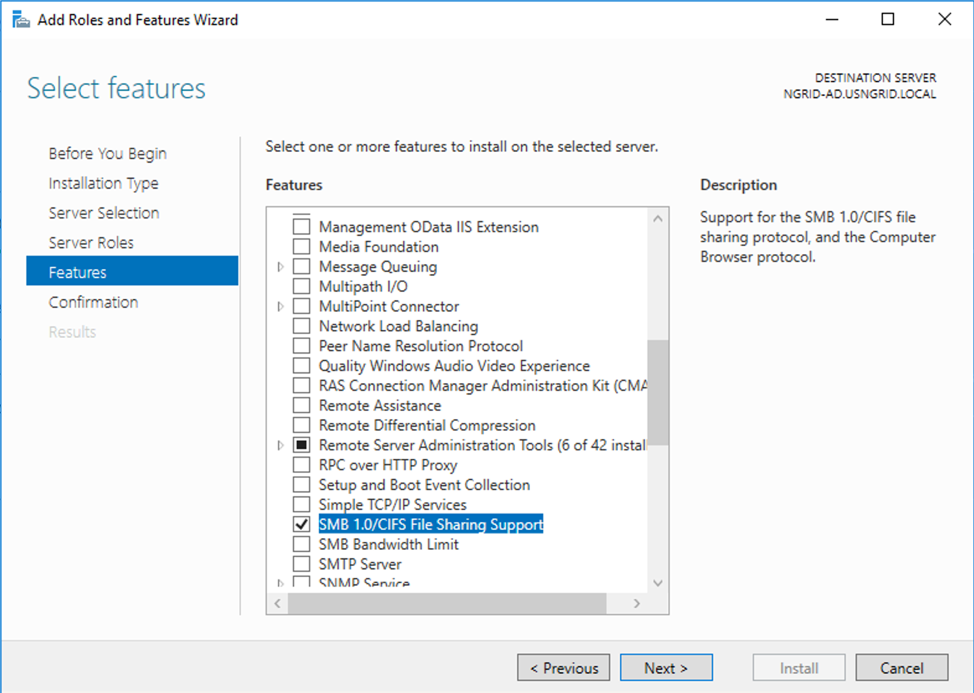

For one of my customers, I needed to add the SMBv1 feature to enable communication with a legacy file server running Windows Server 2003.

A collection of 74 posts

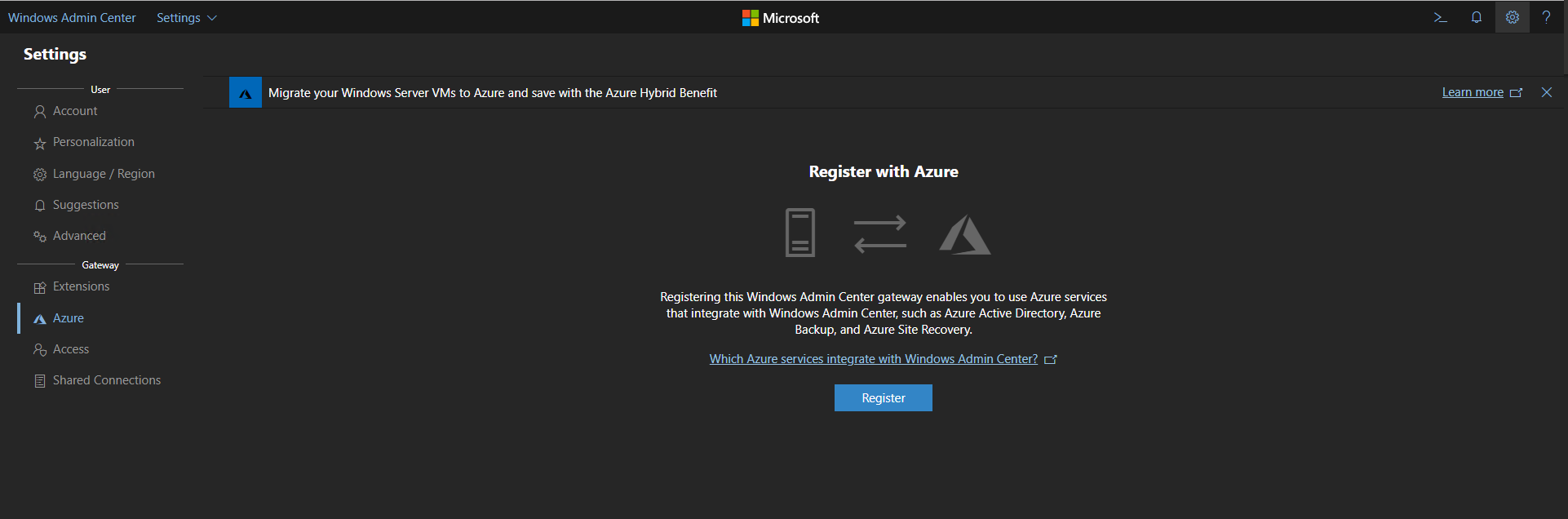

When I start a project with a new customer and the conversation ends up at how to manage your workloads, either on-premises or on Azure, I always like to add Windows Admin Center (WAC) as a topic on managing the workloads...

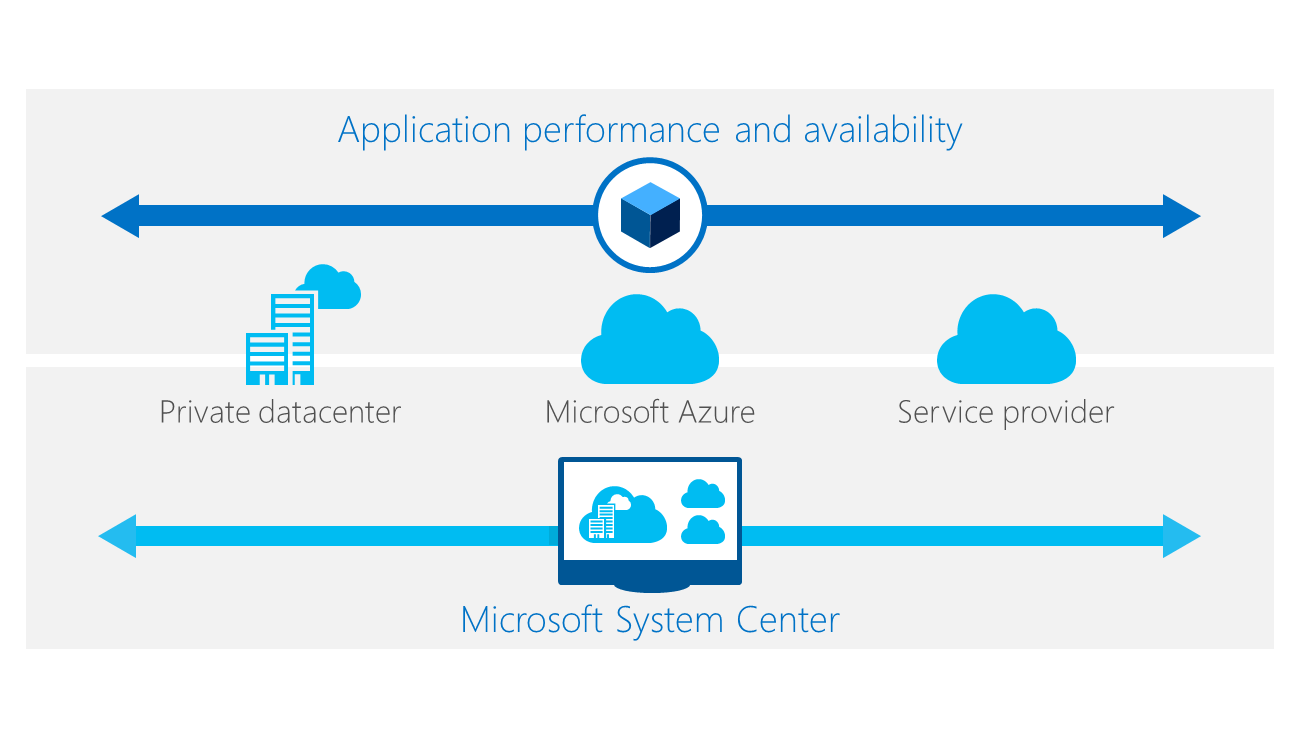

In this series, I want to explore all the situations that you might encounter when you are moving your workloads to the cloud, especially to Azure.

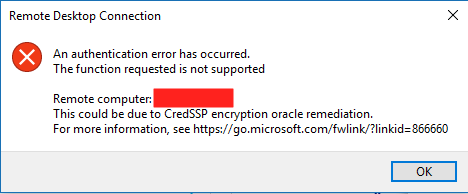

After patching all the Windows Servers in Azure, a colleague called me in a panic because their users could not access their VMs through RDP. They were getting a CredSSP error (picture below).

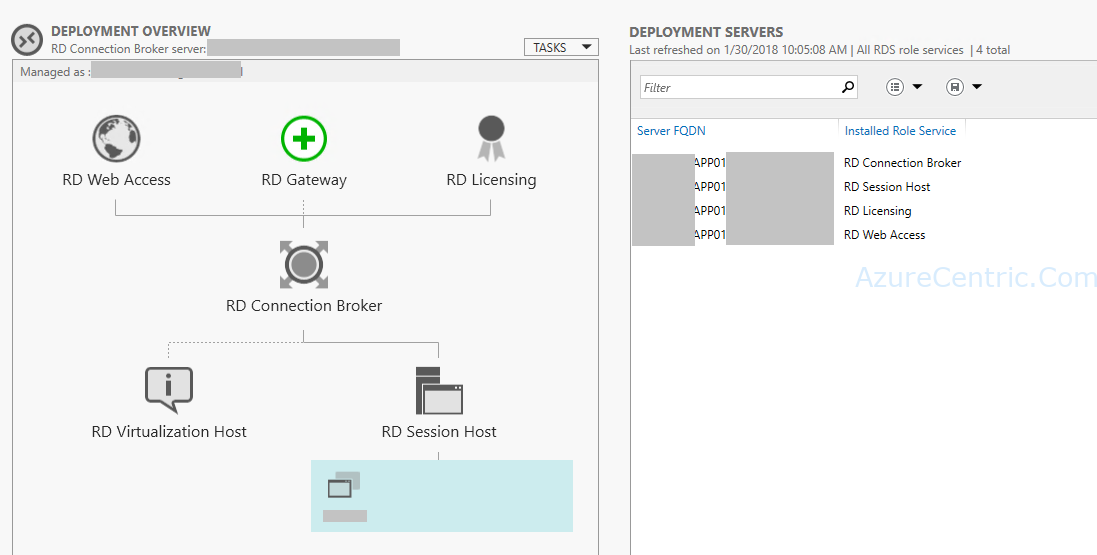

In some organizations, it’s common practice to have a jump server in Azure, especially when you have several tools that need to run “locally” on the server. Usually this machine is on a segregated network and not...

At a local community event, I was answering some questions after my presentation, and one of the attendees asked me if Azure Cloud Shell is the same tool as Azure CLI.

While I was helping a customer create an Azure RM virtual machine from an existing VHD, I adapted one of my existing scripts, with some searching on the internet, to improve the script that I normally use to create Azure RM...

WOW! What a day for me! Microsoft Azure just announced new and improved features in the new version of Azure Backup Server. Let’s start!

In the previous post (see here), I talked about the concept of Containers, Azure Container Service and Azure Service Fabric. Now that you know the concept and have an idea of how to implement it, let’s see how you can deploy...

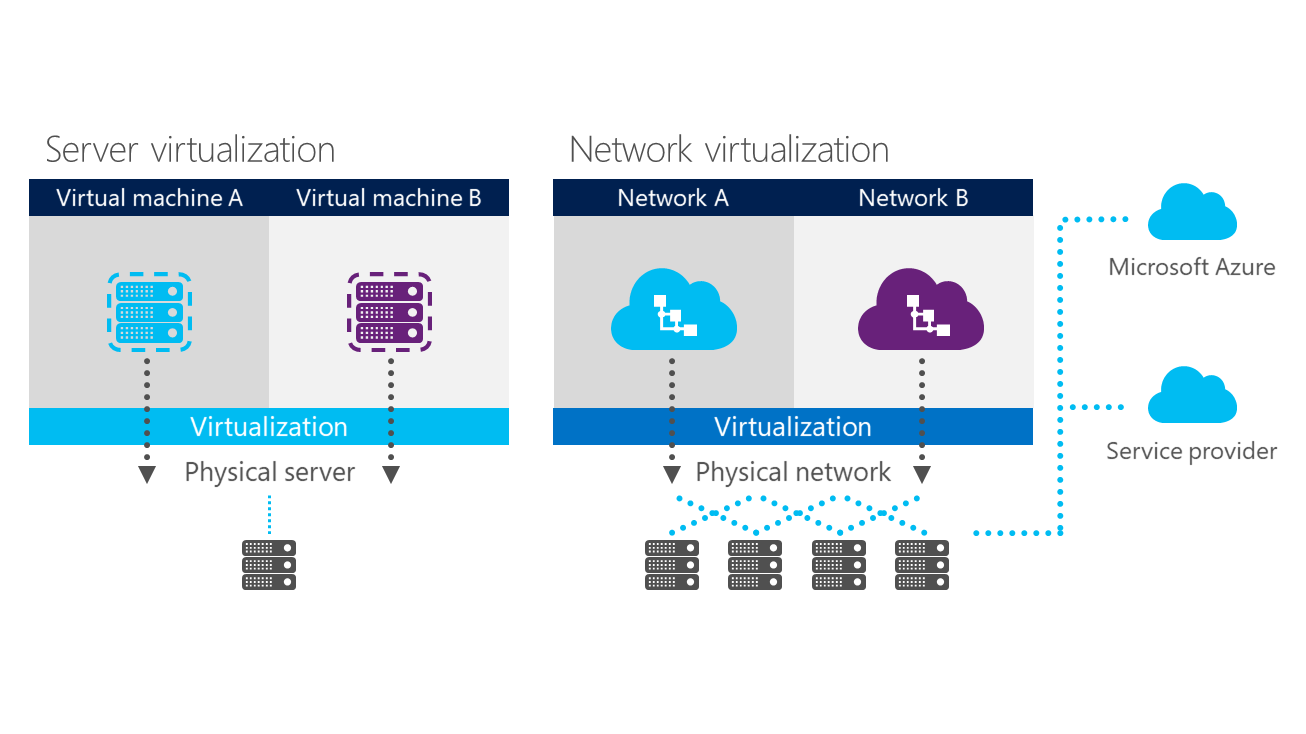

In the last decade, hardware virtualization has drastically changed the IT landscape. One of many consequences of this trend is the emergence of cloud computing. However, a more recent virtualization approach promises to bring even more significant changes to the...

You can deploy a Service Fabric cluster on any physical or virtual machine running the Windows Server operating system, including ones residing in your on-premises datacenters. You use the standalone Windows Server package available for download from the Azure online...

Azure Site Recovery (ASR) is by far one of the Azure features that I always like to play with. Its improvement over time has been extraordinary! When I’m talking about Azure and the cloud with customers, I always ask what...

We know that Microsoft Azure offers several technologies that help to keep your data secure in use, in transit, and at rest. But what additional security measures can we implement to increase the security of a virtual machine? One of...

There are several methods to deploy virtual machines on Azure. One of my favorites is PowerShell. Using PowerShell to deploy VMs gives you the ability to automate the process and be more efficient.

Once you have created an Azure VM instance with the default settings, you will be able to connect to it.

Creating a new VM by using the Azure Portal is a relatively straightforward process. However, it involves several steps, which you should be familiar with to implement the optimal configuration. The first step involves choosing the origin of the...

You can also create Azure VMs by using Azure Resource Manager templates. This option relies on the capability to describe an Azure Resource Manager deployment by using an appropriately formatted text file, referred to as an Azure Resource Manager template....

When you need a consistent method for giving end users who consume resources on your cloud the power to create resources such as virtual machines, but you want to control a lot of the environment...

You can still create classic virtual machines from the Azure portal or by using Azure PowerShell, although Microsoft doesn’t recommend it because it’s an old platform that they want to move away from.

This post is a continuation of the series of posts about Transform your datacenter. You can see the previous posts: Transform the Datacenter – Part 1; Transform the Datacenter – Part 2 – Software-defined Datacenter; Transform the Datacenter – Part 3...

In the previous blog post (see here), I talked about the infrastructure fabric and the enhancements available in Windows Server 2012 R2. But how do you think about the services that run on top of that infrastructure? How...

This post is a continuation of the series of posts about Transform your datacenter. You can see the previous post here.

_Free online event with live Q&A with the WAP team: http://aka.ms/WAPIaaS_

_Free online event with live Q&A: http://aka.ms/virtDC_

If you have a Hyper-V cluster connected through iSCSI to a storage solution, you may sometimes get errors that your CSV volumes went offline. In some cases, the Windows Failover Cluster is able to recover and bring them online automatically and...

In a failover cluster, virtual machines can use Cluster Shared Volumes that are on the same LUN (disk), while still being able to fail over (or move from node to node) independently of one another. Virtual machines can use a...

One of the features in Windows Server 2012 R2 that was improved since the previous version is network virtualization. Virtual networks are created by using Hyper-V Network Virtualization, which is a technology that was introduced in Windows Server 2012.

In previous versions of Windows Server (prior to Windows Server 2012), you had to download and install Multipath I/O (MPIO). Since Windows Server 2012, MPIO is a feature that you can enable. Because it’s a feature that comes with the...

In some medium and large organizations, it is common practice to have different access levels for systems, such as administrator, help desk, support and auditor. When implementing virtual machines on Hyper-V servers, it is also important to reflect these access levels as...